Every now and then, it’s useful to get a sense of assurance about the code you’re writing. In fact, it might be a primary goal of your organization to have functional code. Who knows?

Although I began development of Tandem Exchange following a test-first development process, the pace of change was too rapid. It’s not that I didn’t appreciate the value of testing. At the very beginning, I did implement a large number of tests. It’s just that those tests were written against soon-to-be-obsolete code and I didn’t have the time to develop new functionality and write unit tests simultaneously. Before the prototyping phase had ended, I learned the hard way that it didn’t really make sense to write many of those tests, when such a huge fraction of early functional code ended up in the dustbin.

Once things settled down, I started to leverage coverage.py, the Python code-coverage module, alongside newly-written unit tests. This was made simple by the nose test runner, which is a fantastic tool for test auto-discovery.
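To give a feel for what nose picks up, here is a minimal sketch of a test module in the style of `exchange.search_tests`. The `normalize_query` helper and the test names are hypothetical stand-ins, not code from Tandem Exchange; the point is only that nose collects `unittest.TestCase` subclasses and plain functions whose names look like tests, with no registration step.

```python
import unittest


def normalize_query(text):
    """Hypothetical helper: collapse whitespace and lowercase a search query."""
    return " ".join(text.split()).lower()


class NormalizeQueryTests(unittest.TestCase):
    """Collected by nose because it subclasses unittest.TestCase."""

    def test_collapses_whitespace(self):
        self.assertEqual(normalize_query("  Hello   World "), "hello world")

    def test_empty_query(self):
        self.assertEqual(normalize_query(""), "")


def test_lowercases():
    """Collected by nose because the function name starts with 'test'."""
    assert normalize_query("BERLIN") == "berlin"
```

Nothing here depends on nose itself, so the same file also runs under the standard library's `unittest` loader.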

I then added the nose test runs to the development-site crontab, to generate coverage and unit test statistics on a regular basis. (The entry is wrapped here for readability; cron expects the whole command on a single line.)

    @daily /usr/local/bin/python2.7 /path/to/nosetests -v --with-coverage \
        --cover-package=exchange --cover-erase \
        --cover-html --cover-html-dir=/path/to/webdir/coverage --cover-branches \
        exchange.search_tests exchange.models_tests

All you have to do is specify a handful of extra options on the nosetests command line; it’s practically a freebie. Especially useful are the --cover-html and --cover-html-dir options, which tell nosetests to generate an HTML coverage report and to place it in a specific directory.

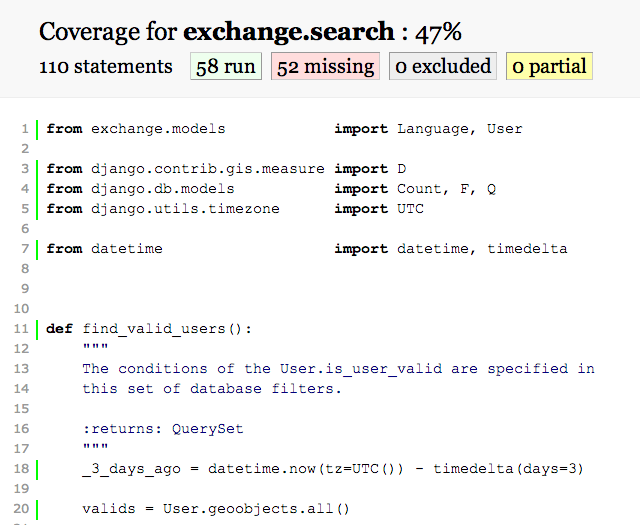

In our case, I created a directory on the webhost, where I can log in and check the report results, which look something like:

The coverage reports show which Python statements (lines) have been exercised by the unit tests that have been run. Green lines have been run at least once, red lines have not been run, and yellow lines indicate that not every branch leaving that line has been taken. (For example, an “if” statement has to be tested for both its True and its False outcome. This is branch coverage, also called decision coverage. It is weaker than Modified Condition / Decision Coverage, which additionally requires showing that each individual condition in a compound test affects the outcome.) Note, however, that a coverage test does not prove that a piece of code behaves the way you expect, only that it has been run. The unit tests are the bits exclusively responsible for proving behavior.
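A small sketch may make the difference between statement coverage, branch coverage, and actual proof of behavior more concrete. The `member_price` function and its tests are hypothetical, made up purely for illustration.

```python
def member_price(price_cents, is_member):
    """Hypothetical example: members get 10% off, everyone else pays full price."""
    if is_member:
        price_cents = price_cents - price_cents // 10
    return price_cents


def test_member_gets_discount():
    # On its own, this test executes every statement in member_price, so
    # statement coverage is 100%. The is_member == False path is never taken,
    # though, so with --cover-branches the "if" line is only partially covered.
    assert member_price(1000, True) == 900


def test_non_member_pays_full_price():
    # Adding this test exercises the other branch of the "if".
    assert member_price(1000, False) == 1000


def test_runs_but_proves_nothing():
    # This call counts toward coverage just like the tests above, but with
    # no assertion it would still pass if the discount logic were wrong.
    member_price(1000, True)
```

With only the first test, the report would show the “if” line in yellow; the second test fills in the missing branch; and the third shows why a high percentage alone says nothing about correctness.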

In any case, I’ve already isolated two issues through the unit tests, and as long as those tests stay in the daily run, I’ll know right away if either one comes back. And as the percentage of statements covered by unit tests continues to increase, I’m sure any remaining issues will shake out. Which is the whole point, isn’t it?