The Problem

When using Visual Studio Code with a password-protected SSH key (as every key should be), it got on my nerves that VSCode asked me for the passphrase every time it tried to connect to a Remote SSH session.

Any time I opened a remote folder or restored a previous set of editing windows connected to remote folders, it would ask for my SSH key passphrase once per window.

This was on Windows 7, which, unlike Windows 10, has no OpenSSH Client package built into the operating system. On a corporate laptop this can be out of your control, as it was in my case. But it also happened to me on my private Windows 10 machine.

After installing Git Bash, you get a MINGW64 copy of ssh-agent that works fine with Visual Studio Code, and you can set up .bashrc to share a single copy of ssh-agent across all instances of Git Bash that you start.

You can also export the SSH_AGENT_PID and SSH_AUTH_SOCK variables from Git Bash straight into the User Environment variables of your Windows session using the setx command.

The Solution

.bashrc

    env=~/.ssh/agent.env

    # Load the saved agent variables (SSH_AUTH_SOCK, SSH_AGENT_PID), if the file exists
    agent_load_env () { test -f "$env" && . "$env" >| /dev/null ; }

    # Start a new agent and save its variables in a file only this user can read
    agent_start () {
        (umask 077; ssh-agent >| "$env")
        . "$env" >| /dev/null ; }

    agent_load_env

    # agent_run_state: 0=agent running w/ key; 1=agent w/o key; 2=agent not running
    agent_run_state=$(ssh-add -l >| /dev/null 2>&1; echo $?)

    if [ ! "$SSH_AUTH_SOCK" ] || [ $agent_run_state = 2 ]; then
        echo "Starting ssh-agent and adding key"
        agent_start
        ssh-add
        # Publish the agent variables to all newly started Windows processes
        echo "Setting Windows SSH user environment variables (pid: $SSH_AGENT_PID, sock: $SSH_AUTH_SOCK)"
        setx SSH_AGENT_PID "$SSH_AGENT_PID"
        setx SSH_AUTH_SOCK "$SSH_AUTH_SOCK"
    elif [ "$SSH_AUTH_SOCK" ] && [ $agent_run_state = 1 ]; then
        echo "Reusing ssh-agent and adding key"
        ssh-add
    elif [ "$SSH_AUTH_SOCK" ] && [ $agent_run_state = 0 ]; then
        echo "Reusing ssh-agent and reusing key"
        ssh-add -l
    fi

    unset env

This is a modified version of the GitHub suggestion (“Working with SSH key passphrases – GitHub Docs”).
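The three branches of the script hinge on the exit status of ssh-add -l, as summarised in the agent_run_state comment. As a minimal sketch, a small helper can turn that status into a readable message when you want to check by hand what state the agent is in. The name describe_agent_state is made up for illustration and is not part of the GitHub script.

```shell
# Hypothetical helper, not part of the original .bashrc:
# translate the exit status of "ssh-add -l" into a message.
describe_agent_state () {
    case "$1" in
        0) echo "agent running, key loaded" ;;
        1) echo "agent running, no key loaded" ;;
        2) echo "agent not reachable" ;;
        *) echo "unexpected status: $1" ;;
    esac
}

# Ask the agent for its keys, discard the listing, keep only the status.
ssh-add -l > /dev/null 2>&1
describe_agent_state $?
```

Run in a fresh Git Bash window, this should report the same state that the .bashrc acted on.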

setx is a Windows command that sets User Environment variables in HKEY_CURRENT_USER, which are then used by all newly-started processes:

“On a local system, variables created or modified by this tool will be available in future command windows but not in the current CMD.exe command window.”

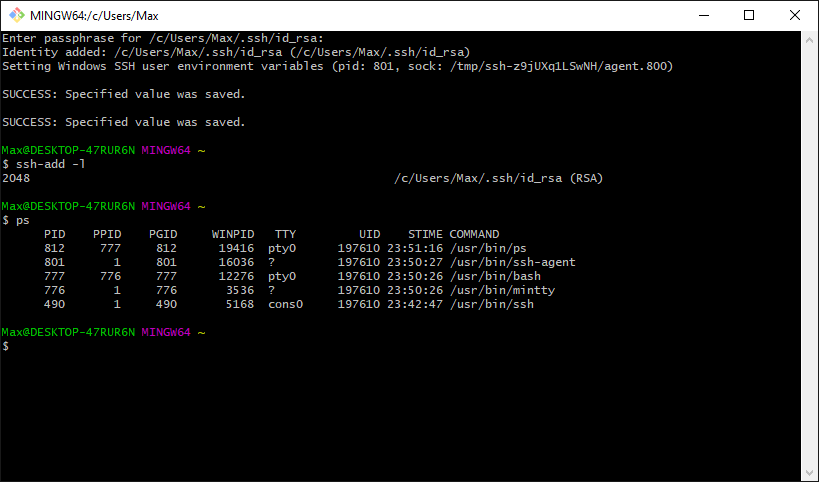

Starting Git Bash, you’ll see:

Every Git Bash window you open after that will share the same ssh-agent instance.

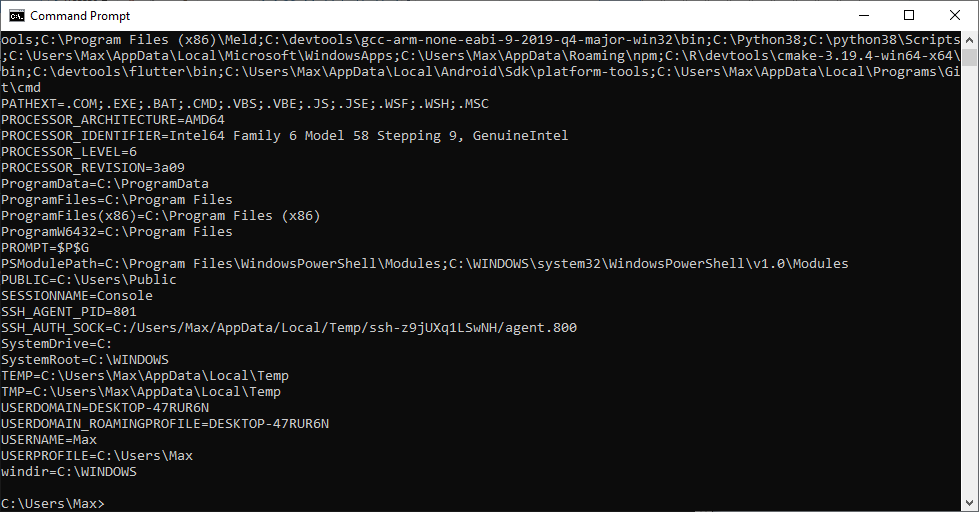
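One way to convince yourself that a second Git Bash window really reuses the first agent is to check the process ID stored in SSH_AGENT_PID. The following is only a sketch: agent_pid_alive is a hypothetical helper name, and it assumes that the kill built into Git Bash understands the process ID that ssh-agent reported.

```shell
# Hypothetical helper: succeed if the given process ID is still running.
# "kill -0" sends no signal; it only checks that the process exists
# and that we are allowed to signal it.
agent_pid_alive () {
    [ -n "$1" ] && kill -0 "$1" 2> /dev/null
}

if agent_pid_alive "$SSH_AGENT_PID"; then
    echo "ssh-agent (pid $SSH_AGENT_PID) is running"
else
    echo "no running ssh-agent found for pid '${SSH_AGENT_PID:-unset}'"
fi
```

If two windows print the same pid and both report it as running, they are talking to the same agent.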

Starting Command Prompt, you’ll see:

This shows that the SSH_AGENT_PID and SSH_AUTH_SOCK variables were set.

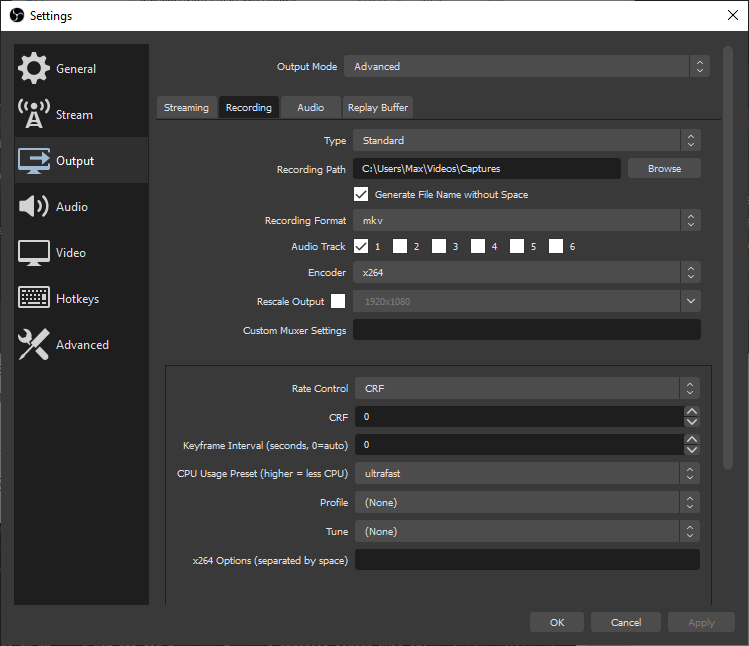

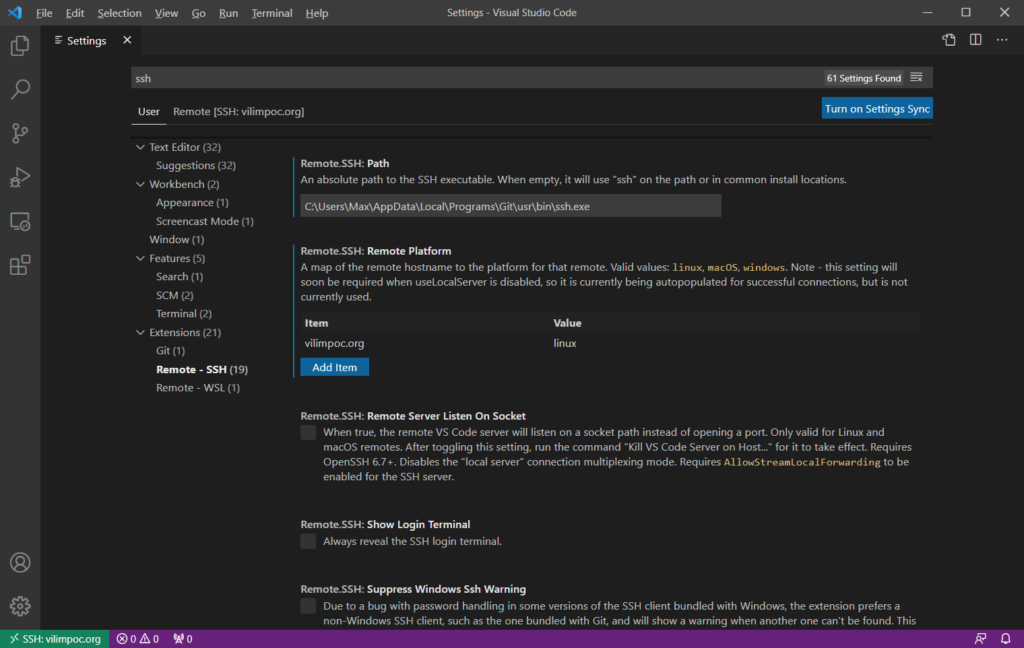

VSCode

Once you have the .bashrc set up and have opened up at least one Git Bash window, all Remote sessions will reuse the currently-running ssh-agent and you shouldn’t be asked for the key passphrase again.

But you need to solve one final problem:

Problem: VSCode keeps asking me “Enter passphrase for key”.

Solution: You have to use the ssh.exe from the Git Bash installation, e.g. C:\Users\Max\AppData\Local\Programs\Git\usr\bin\ssh.exe:

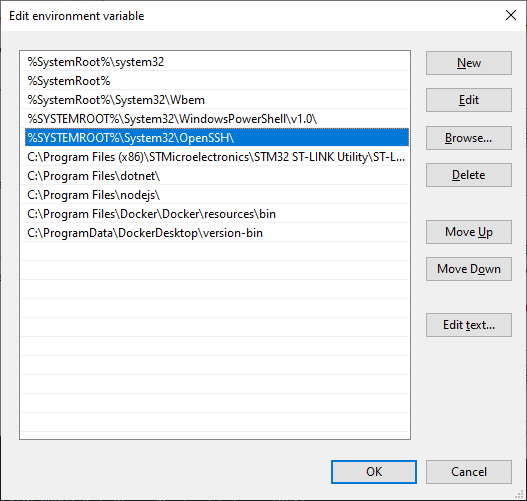

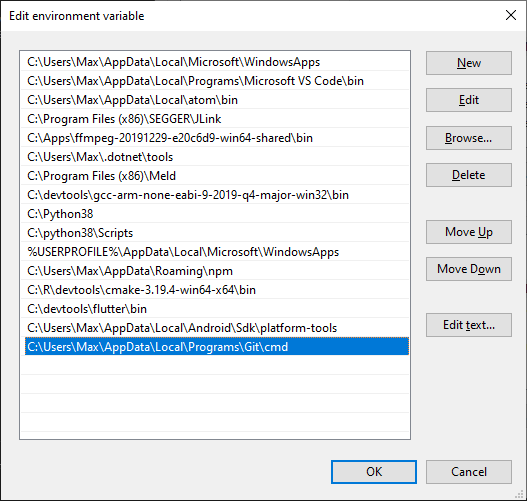

The reason is that the Windows built-in OpenSSH comes before the Git Bash SSH in the PATH, so it is the one that gets executed, and it does not pick up the ssh-agent started by Git Bash.
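To see which copies of ssh are installed and in what order a search path finds them, a sketch like the following can help. list_on_path is a hypothetical helper, not an existing command. Note that inside Git Bash the PATH is Git Bash's own, which usually puts its own /usr/bin first, so the order printed here can differ from what VSCode sees when started from Windows. In a Command Prompt, where ssh does the same job for the Windows PATH.

```shell
# Hypothetical helper: print every executable called NAME found in the
# directories of a colon-separated list (default: $PATH), in search order.
# The body runs in a subshell, so the IFS and globbing changes stay local.
list_on_path () (
    name=$1
    path_list=${2:-$PATH}
    IFS=:
    set -f
    for dir in $path_list; do
        # An empty PATH entry means the current directory
        candidate="${dir:-.}/$name"
        if [ -f "$candidate" ] && [ -x "$candidate" ]; then
            printf '%s\n' "$candidate"
        fi
    done
)

# The first line printed is the copy this shell would run.
list_on_path ssh
```

If more than one line is printed, more than one ssh is installed, and pointing VSCode at the Git Bash copy explicitly avoids depending on the search order.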

Because of all the problems I had with using Windows OpenSSH, it may even be worth completely removing it.

You can do this by starting Windows PowerShell as Administrator and running:

Remove-WindowsCapability -Online -Name OpenSSH.Client~~~~0.0.1.0

and

Remove-WindowsCapability -Online -Name OpenSSH.Server~~~~0.0.1.0