Sometimes when you’re trying to figure out an issue in a Django production environment, the default exception tracebacks just don’t cut it. There’s not enough scope information for you to figure out what parameters or variable values caused something to go wrong, or even for whom it went wrong.

It’s frustrating as a developer, because you have to infer what went wrong from a near-empty stacktrace.

To get more detailed error reports from Django on the production server, I did a bit of searching and found a few examples like this one. They rewrite a piece of core functionality, which seemed a bit off to me: if the underlying function changes significantly, the rewrite won’t keep up.

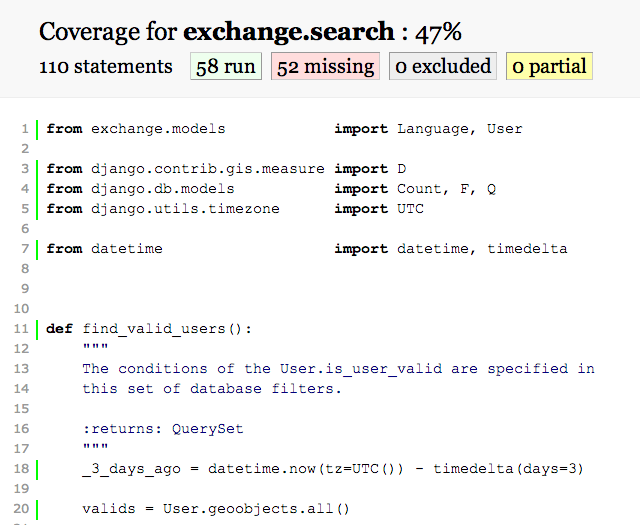

So I came up with something different: a monkey patch that redirects the handler function. It adds the extra step I want (emailing me a detailed report) and then calls the original handler to perform the default behavior:

```python
# Improve the error message output, so I can actually debug / figure out
# what the hell went wrong during postmortems of HTTP 500 Server Errors.
#
# Based on http://djangosnippets.org/snippets/2244/
#
# Modifies the handler in a similar way, but doesn't rewrite the whole thing.
# Just specifies additional behavior, then calls the saved original handler.
#
# Relies on BaseHandler.handle_uncaught_exception(self, request, resolver,
# exc_info), as found in the older Django releases this was written against;
# check that your Django version still has it before using this patch.

from django.core.handlers.base import BaseHandler


def better_uncaught_exception_emails(self, request, resolver, exc_info):
    """
    Processing for any otherwise uncaught exceptions (those that will
    generate HTTP 500 responses). Can be overridden by subclasses who want
    customised 500 handling.

    Be *very* careful when overriding this because the error could be
    caused by anything, so assuming something like the database is always
    available would be an error.
    """
    from django.conf import settings
    from django.core.mail import EmailMultiAlternatives
    from django.views.debug import ExceptionReporter

    # Only send detailed emails in the production environment.
    if not settings.DEBUG:
        reporter = ExceptionReporter(request, *exc_info)

        # Prepare the email headers for sending.
        from_ = u"Exception Reporter <your-errors@domain.com>"
        to_ = from_
        subject = "Detailed stack trace."

        message = EmailMultiAlternatives(
            subject, reporter.get_traceback_text(), from_, [to_])
        message.attach_alternative(reporter.get_traceback_html(), 'text/html')
        # fail_silently=True: if the mail server is also down, don't let that
        # stop the default 500 handling below from running.
        message.send(fail_silently=True)

    # Make sure to then just call the original handler.
    return self.original_handle_uncaught_exception(request, resolver, exc_info)


# Save the original handler and install the override, but only once: if this
# module were executed a second time, saving again would store the override as
# the "original" and every 500 would recurse forever.
if not hasattr(BaseHandler, 'original_handle_uncaught_exception'):
    BaseHandler.original_handle_uncaught_exception = BaseHandler.handle_uncaught_exception
    BaseHandler.handle_uncaught_exception = better_uncaught_exception_emails
```
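To see the pattern in isolation, here is a minimal, framework-free sketch of the same idea: save the original method, install a wrapper that does the extra work, then delegate to the saved original. `Handler`, `handle_error`, `report_extra`, `install_patch`, `uninstall_patch` and `sent_reports` are hypothetical stand-ins, not Django names; the list simply plays the role of the mail outbox.

```python
# Framework-free sketch of the "save original, wrap, delegate" monkey patch.
# Handler, handle_error, report_extra, install_patch and uninstall_patch are
# hypothetical names used only for illustration.


class Handler:
    def handle_error(self, exc):
        # Stand-in for the framework's default error handling.
        return "default response for {}".format(exc)


# Stands in for the outbox that would receive the detailed emails.
sent_reports = []


def report_extra(self, exc):
    # Extra step: record a detailed report for this error.
    sent_reports.append("detailed report: {}".format(exc))
    # Then delegate to the saved original, so default behaviour is unchanged.
    return self.original_handle_error(exc)


def install_patch():
    # Save the original only once; otherwise a second call would store the
    # wrapper as the "original" and every call would recurse forever.
    if not hasattr(Handler, "original_handle_error"):
        Handler.original_handle_error = Handler.handle_error
        Handler.handle_error = report_extra


def uninstall_patch():
    # Because the original method was never edited, undoing the patch is
    # just a matter of putting it back.
    if hasattr(Handler, "original_handle_error"):
        Handler.handle_error = Handler.original_handle_error
        del Handler.original_handle_error
```

Calling `install_patch()` twice and then `Handler().handle_error(ValueError("boom"))` returns the default response and records exactly one detailed report. `uninstall_patch()` restores the untouched original method, which is the practical upside of never editing the original code.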

Note that with this code you end up with two emails: the usual generic error report, and the highly detailed one containing the information you normally only see when you hit an error while developing the site with `settings.DEBUG = True`. The two are sent within milliseconds of each other. The real benefit is that none of the original code of Django’s base classes is touched, which I think is a good idea.

Another thing to keep in mind: you probably want to put your OAuth secrets and other deployment-specific values in a file other than settings.py, because the values in settings are included in the detailed report that gets emailed.
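One way to follow that advice, sketched under assumptions: read each secret from a separate JSON file at the point of use instead of defining it in settings.py. The file location, the `APP_SECRETS_PATH` environment variable and the `load_secret` helper are all hypothetical. A value loaded this way can still appear in a report if it sits in a local variable of a frame in the traceback; Django 1.4 and later provide the `sensitive_variables` decorator in `django.views.decorators.debug` for hiding such locals.

```python
import json
import os

# Hypothetical location of a deployment-specific secrets file that lives
# outside settings.py (and outside version control).
DEFAULT_SECRETS_PATH = os.environ.get("APP_SECRETS_PATH", "/etc/myapp/secrets.json")


def load_secret(name, path=None):
    """Return one secret from a JSON file, reading it at call time.

    The value never becomes a Django setting, so it is not part of the
    settings dump in a detailed error report. Raises KeyError if the
    secret is missing.
    """
    with open(path or DEFAULT_SECRETS_PATH) as fh:
        secrets = json.load(fh)
    return secrets[name]
```

A view that needs the value would call something like `load_secret("OAUTH_CLIENT_SECRET")` (a hypothetical key name) right where it is used.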

One final note: I am continually amazed by Python. The fact that functions are first-class objects and class attributes can be reassigned at runtime lets you hack functionality in, in ways the original software designers didn’t foresee, which is fantastic. It really lets you get around problems that would require more tedious solutions in other languages.