What’s the difference between these two laptops?

Besides 7 years and a few generations of processor technology, surprisingly little. The Dell was born in 2004, the Mac was born in 2011. There’s USB 2.0, Gigabit Ethernet, and 802.11abg on the Dell thanks to eBayed expansion cards, so the connectivity options are roughly equivalent. There’s better power management for sure on the latest Mac models, more/multiple cores, multithreading, more Instructions Per Cycle (IPC) at the chip level, and built-in video decoding, among various other technical improvements.

But does the laptop on the right actually help me get work done faster than the laptop on the left?

Probably not.

And therein lies a major source of Intel’s and Microsoft’s year-on-year growth problems. Their chips, and in fact, the whole desktop PC revolution, have produced machines that far outstrip the average user’s needs. The acute cases (playing games, folding proteins, making and breaking cryptography, and so on) will always benefit from improved speed. But the average web surfer / Google Docs editor / web designer / blogger could probably make do with a machine from 2004.

In fact, one might be willing to say that the old computer is actually better for getting work done, because it consumes such a huge fraction of CPU performance when playing YouTube videos. There’s no room for the faux, 10-way “efficient multitasking” people believe they are capable of doing these days.

Which means I can essentially single-task or dual-task (write some code, refresh the browser), and keep my attention focused on fewer tasks. I’m constrained in a useful way by the hardware, and I can use those constraints to my advantage.

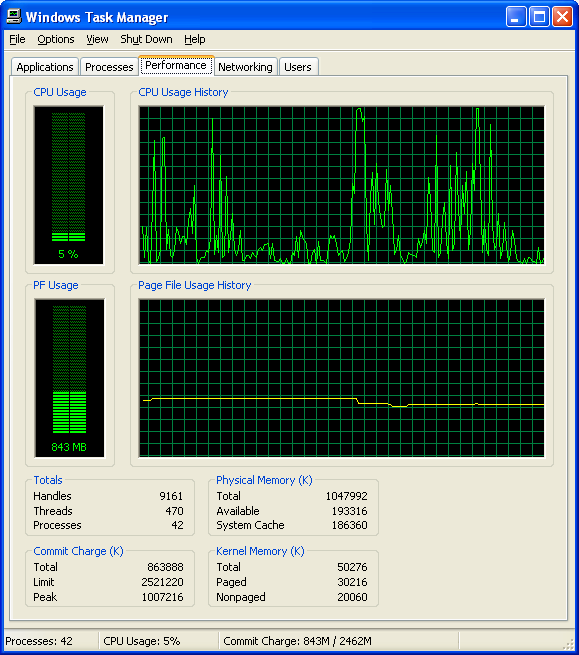

The software stack remains the same (one of the great achievements of the Windows era): Google Chrome, PuTTY, iTunes, maybe a Firefox window open in there. All of this runs just fine on a Pentium 4-M at 2.0 GHz with 1 GB of RAM (probably about the performance of the Nexus 7, a 4 + 1-core ARM processor with 1 GB of RAM), and the CPU is still over 90% idle. When Intel announces that it’s building chips with ten times the performance, that just means the processor is ten times more idle on average. Big whoop.

What I’m saying is: if all the average user needs is the compute-equivalent of a laptop from 2004, then that’s effectively what a current smartphone or tablet is.

But then what I’m also asking is: Why can’t I do as much work on a smartphone from 2013, as I can on a laptop from 2004?

I believe that there are a number of huge shortcomings in the phone and tablet world, which still cause people to unnecessarily purchase laptops or desktops:

- The output options are terrible. While it’s nice to have a Retina display with what is essentially mid-1990s, laser-printer dots-per-inch resolution, you can still only fit so much text into a small space before people can’t read it anyway. It doesn’t matter how sharp it is, the screen is just too damn small. What would be nicer for anyone buying these expensive, output-only devices, would be the ability to hook any tablet up to a 24″ HD monitor and then throw down some serious work or watch some serious Netflix.

- Why can’t I, for the love of God, plug a USB memory stick into one of these things? It’s just a computer, computers have USB ports, ergo, I ought to be able to plug a memory stick into a tablet. Whose artificial constraints and massively egocentric desire to organize all of the world’s information is keeping me from using a tablet to quietly organize mine in the comfort and literal walled-garden of my own home?

- There’s no Microsoft Excel on a tablet, and Google Sheets is obviously not a useful replacement. But that is a moot point, because…

- …the input options for tablets are terrible. There are no sensible input options for anyone wanting to do more than consume information. Content creation, especially when it comes to inputting data, is impossible. Keyboards-integrated-into-rubbery-crappy-covers will not help here. So tablets are still only useful for 10-foot view user interfaces, and things that don’t require much precision. Sure, Autodesk and other big-league CAD companies have built glorified 3D model viewers for tablets, but note how many of the app descriptions include the word “view” but not the words “edit” or “create”. No serious designer would work without keyboard shortcuts and a mouse or Wacom tablet.

- The walled-garden approach to user-interface design and data management in the cloud is terrible. There are multiple idioms associated with saving and loading data, multiple processes, and no uniformity at the operating-system level. With the single Open dialog killed off, along with the hierarchical storage and user-defined storage schemas (in the form of endlessly buried directories) it was coupled to, it’s very hard for a user to get a sense of the scale and context of their data. People have their own ways of navigating and keying in on their data, but the one-size-fits-all cloud model rubs abrasively right up against that. It seems to me that eliminating the Open dialog was more an act of dogma than of use-testing, and that it’s almost heresy (and in fact, essentially coded into OS X (via App Sandboxing), iOS, and Android’s DNA now) to wish for the unencumbered dialog to return. Cordoning data off into multiple silos is akin to saving data on floppies or that old stack of burned CDs you might have lying about somewhere: it’s not really fun to have to search through them all to find what you were looking for.

Unfortunately, by refusing to consider the above points, no one’s currently thinking about using these phones and tablets for what they really are: full-fledged desktop/laptop replacements, particularly when combined with things like Nvidia’s GRID technologies for virtualized 3D scene rendering.

The market for computing devices is now very strange indeed. Phablet manufacturers sell input/output-hobbled devices for more than full-fledged machines and even command huge price premiums for them. Meanwhile, the old desktop machine in the corner is still more than powerful enough to survive another processor generation, but it’s the old hotness and no one really wants to use it. And no one is making real moves to make the smartphone the center of computing in the home, even though that is where most of the useful performance/Watt research and development is going.

So here’s a simple request for the kind of freedom to use things in a way the manufacturers never anticipated: I’d like to have text editing on a big monitor work flawlessly out-of-the-box with my next smartphone, using HDMI output and a Bluetooth keyboard. (Why aren’t we even there yet? It took several versions of Android and several ROM flashes before I could even get it working, and even now the Control keys aren’t quite working.)

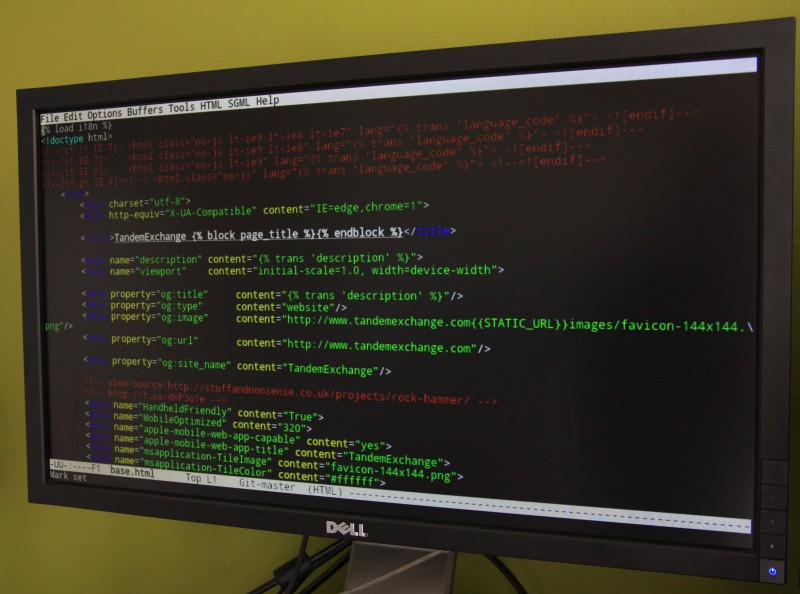

Add in Chrome / Firefox on the smartphone and you have enough of a setup for a competent web developer to do some damage, and perform most of the write, refresh, test development cycle. And be able to do this anywhere via WiFi or HSDPA/LTE connections (a fallback data connection apparently being necessary if you ever want to do work at this place, snarkiness aside).

There’s so much more usability that could be enabled if the input, output, and storage subsystems of the major smartphone OSes were ever made to be unconstrained.

Such a shift to smartphone primacy is almost possible today, and I can only wonder whether in a few years’ time (2 – 3 phone generations) this scenario will become the norm, but I’m not holding my breath. The companies involved in the current round of empire building (including some of the companies involved in the last) are seemingly too concerned with controlling every part of the experience to allow something better to reach us all.

Everything you’ve written is true, and I’m certainly intrigued by your phone driving the large monitor and being able to edit source code on it with a keyboard. But I think we are many, many years (perhaps generations) away from “real” computers being replaced by tablets/smartphones, for the simple reason that you mentioned – input and output.

Let’s face it, tablets and smartphones are toys. iPads are great for the casual internet user that you mention (and yes, people whose jobs involve little more than email). But nobody who needs a computer for anything more than that can get by with the small screen and touchscreen input. The advantage to these things (other than a “cool” experience, e.g. playing certain games that just feel good with a touchscreen UI) is their portability. What I can fit in my pocket on my iPhone is amazing. But any serious work (e.g. coding) requires a lot more. I see a few possible solutions, all of which are very far off:

1) technology – tiny keyboards become close enough to “real” keyboards. Maybe this means that I can roll out a paper thin keyboard that gives me the same tactile feedback that my $5 Dell keyboard does. Output-wise, some Google Glass-like display that simulates a 30 inch monitor, or maybe a tiny projector. No matter what, to provide an experience comparable to coding with real large monitors and a real keyboard, we are many years away. Maybe centuries from now I will be able to fit a tiny 3D printer in my pocket that will fabricate my keyboard and monitors instantly on demand. Or maybe engineers will code by thinking in the year 2350. It’s easy to think of technological solutions, but I think we’d all agree anything reasonable is very far away.

2) infrastructure – instead of holding everything I need in my pocket, maybe I can plug in. My office, home, and coffee shop all have monitors and keyboards (just like docking stations for laptops). This is easy, but expensive. I/O would have to be standardized. Phones that can interface with this stuff would be more expensive than ones that don’t, so then you’d have an electric car problem (people would buy more electric cars if there were more places to charge, and people would build more places to charge if there were more electric cars).

So, #1 is extremely difficult, and #2 is extremely expensive. #1 will eventually come. But I’d argue that #2 has no real advantage over what we currently have. What’s so bad about having a desktop machine at my office if I’m going to have all of this other hardware anyway? Is it that hard to carry an ultra-light laptop around? And what’s wrong with a cell phone being a glorified toy anyway?

I think it’s just a matter of perception. Tablets and smartphones have traditionally been considered toys because they’ve been so I/O constrained since inception.

There’s no doubt in my mind that with a quad-core Nexus 4, I could just as easily write apps for the phone, on the phone, as I can on a laptop. I’d even get the benefit of not having to do remote debugging (a real joy, esp. when using wrapped native code via JNI on Android), even though the Google and Apple tools for doing that are pretty good. It’s simply the artificial restrictions put in place that prevent people from thinking Xcode or other desktop apps could run on an iPhone, where I’m sure they could. Where speed is concerned, this is also mostly a perception issue. It’s a problem on desktops as well that almost every program ever written doesn’t bother to do calculations (compilation, for instance) until explicitly asked, where a smart piece of software could just be caching the results of calculations (object files / linked executables, or whatever) and displaying the results when requested.
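To make the caching idea concrete, here is a minimal sketch in Python of what "don't recompute until the input changes" could look like. It is an illustration under stated assumptions, not how any particular compiler or IDE works: `ResultCache` and `fake_compile` are hypothetical names invented for this example, and the "compilation" is just a stand-in function. The only real library call is `hashlib.sha256`, used to key results by the content of the source text.

```python
import hashlib


class ResultCache:
    """Hypothetical cache: stores an expensive result keyed by a hash of its input."""

    def __init__(self, compute):
        self._compute = compute   # the expensive step, e.g. a compiler invocation
        self._store = {}          # content hash -> cached result
        self.misses = 0           # how many times the expensive step actually ran

    def get(self, source: str):
        # Identical source text always hashes to the same key.
        key = hashlib.sha256(source.encode("utf-8")).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._compute(source)
        return self._store[key]


def fake_compile(source: str) -> str:
    # Stand-in for a real compile step (assumption: any pure function of the source).
    return source.upper()


cache = ResultCache(fake_compile)
cache.get("int main() {}")   # runs fake_compile
cache.get("int main() {}")   # unchanged input, served from the cache
```

In this sketch the second request costs one hash and one dictionary lookup, which is the kind of trade that would matter more on a phone-class processor than raw clock speed.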

Sure, people who write code might need more screen space, but more compute power? Probably not. And in any case that stuff can be offloaded. In two to three generations’ time, the tablet will have at least as much performance as the Core 2 desktop I still write code on.

I think you have the likelihood and utility of point 1 and point 2 reversed.

With respect to point 1, the possible technologies of the future are nice to think about but have no bearing on the fact that I almost got this to work with today’s technology. I’m not sure why it would be necessary to discard existing I/O and display technologies as usable solutions right now, while hoping for vaporware technologies to make a completely different experience somehow possible years down the road. Sure, people might use a successor to the Oculus Rift to view a virtual wall of monitors with head-tracking, but that’s a completely different use case. Were it possible to ditch their desktops and laptops for an x86-compatible smartphone with enough I/O ports and Windows right now, I don’t see what company wouldn’t do it for the power savings alone. This is what Microsoft should have done with the Windows 8 transition, but Ballmer would be loath to piss off the OEMs, who are all getting slaughtered now anyway and make more margin out of their smartphone / tablet divisions to boot.

With respect to point 2, the infrastructure’s already there. I’ve got monitors, and I’ve got keyboards. The phone I attempted this with is 2 years old, and purchased off eBay for just north of 100 euros. Interfacing isn’t a problem, if phone manufacturers would just bother to add 50-cent micro-HDMI connectors to their devices. Most of the System-on-Chip packages have multiple display outputs built into the silicon anyway, or have the option to enable this in the silicon for a pittance. Cost really isn’t a factor here at the quantities already being manufactured.

Ultimately, those other devices don’t go away completely, but smartphones and tablets become more capable for effectively no cost. What doesn’t make sense to me is the continuous injection of new hardware features into smartphone silicon, where it’s not even possible to truly tap the potential of that hardware, because device manufacturers hobble their devices so badly. At that point the original question can be raised again: What’s the point of putting desktop-class performance into a smartphone, if you can’t use the smartphone as a desktop-replacement?

Thanks for the response. I completely agree that computing-wise, we don’t need more power for most tasks.

I should have made it clear – my goal is to maintain the portability of the smartphone (which is really its only real advantage over a laptop or desktop, right?). So you have the monitors and keyboards, but do they have them everywhere? Maybe home and work is enough (assuming you’re right about companies outfitting their offices to support this setup) but I would want to be able to use the thing on planes, trains, coffee shops, etc. – and for that reason it’s hard to see a real laptop being completely replaced. That was why I mentioned point 1 – because with the right (vaporware) technology, you really could use this tiny thing everywhere (with the same experience as today’s notebooks). That is the ultimate goal, I think.

But in any case, I do think I wasn’t getting your overall point – that they are making them more powerful without enhancing the usability. I guess the answer to your final question is “why not?”